Deploying MongoDB on Kubernetes

Posted by NetworkWhois on

After spending countless hours tweaking and optimizing MongoDB deployments on K3s for various projects, I've finally nailed down a reliable approach that I wish I had known when I started. Today, I'm sharing my battle-tested method for deploying MongoDB on K3s, including all the tricks and pitfalls I've discovered along the way.

If you haven't set up K3s yet, check out my previous guide on installing K3s on Ubuntu first. Once you have that ready, let's dive into deploying MongoDB.

Why MongoDB on K3s?

Before jumping into the deployment steps, let me share why I chose this setup. For my microservices architecture, I needed a database solution that was both scalable and easy to manage. Running MongoDB on K3s gives me the best of both worlds: MongoDB's flexibility and K3s's lightweight orchestration. Plus, having Kubernetes automatically restart a failed MongoDB pod has saved me from many potential disasters!

Prerequisites

- A running K3s cluster

- kubectl configured and ready

- At least 4GB RAM available for MongoDB

- Storage space for persistent volumes

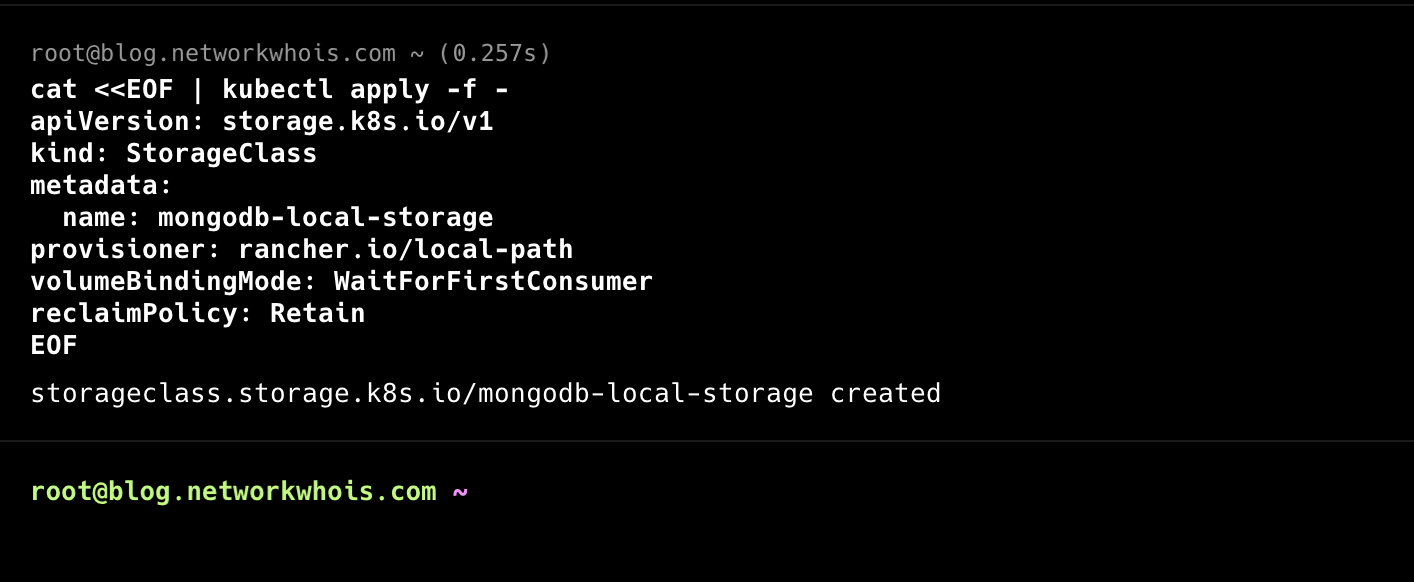

Step 1: Setting Up the Storage

First, let's create a StorageClass for MongoDB. I've learned that proper storage configuration is crucial for database performance:

cat <<EOF | kubectl apply -f -
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: mongodb-local-storage
provisioner: rancher.io/local-path
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Retain
EOF

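I use the WaitForFirstConsumer binding mode because it delays volume provisioning until the pod has been scheduled, so the local volume is created on the node where MongoDB actually runs. That avoids the scheduling issues I encountered with immediate binding. Trust me, this saves headaches later!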

Step 2: Creating a Namespace

I always isolate my database deployments in their own namespace:

kubectl create namespace mongodb

Step 3: MongoDB Deployment

Here's my production-tested MongoDB deployment configuration:

cat <<EOF | kubectl apply -f -

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: mongodb

namespace: mongodb

spec:

serviceName: mongodb

replicas: 1

selector:

matchLabels:

app: mongodb

template:

metadata:

labels:

app: mongodb

spec:

containers:

- name: mongodb

image: mongo:6.0

ports:

- containerPort: 27017

env:

- name: MONGO_INITDB_ROOT_USERNAME

valueFrom:

secretKeyRef:

name: mongodb-secret

key: username

- name: MONGO_INITDB_ROOT_PASSWORD

valueFrom:

secretKeyRef:

name: mongodb-secret

key: password

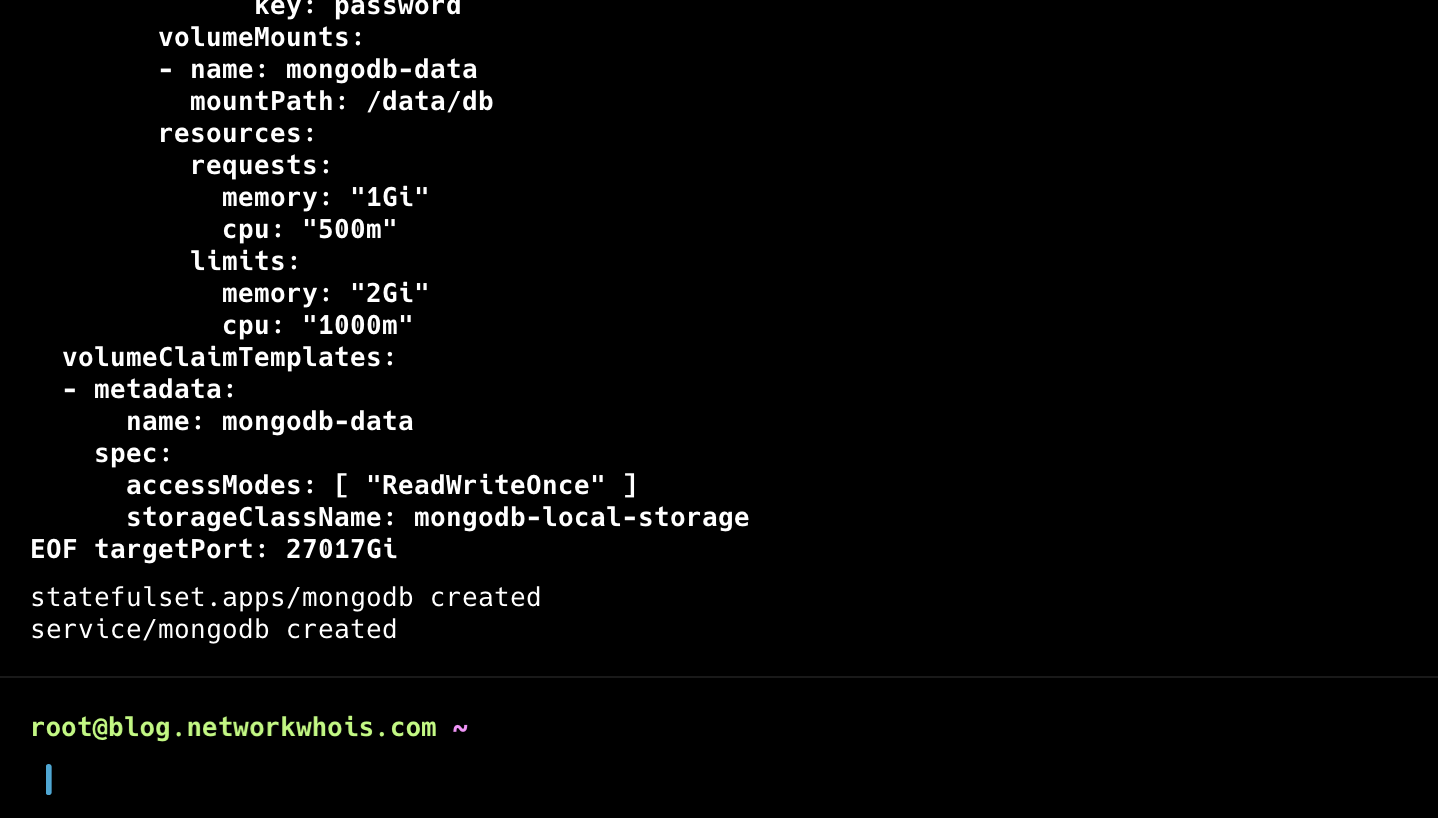

volumeMounts:

- name: mongodb-data

mountPath: /data/db

resources:

requests:

memory: "1Gi"

cpu: "500m"

limits:

memory: "2Gi"

cpu: "1000m"

volumeClaimTemplates:

- metadata:

name: mongodb-data

spec:

accessModes: [ "ReadWriteOnce" ]

storageClassName: mongodb-local-storage

resources:

requests:

storage: 10Gi

---

apiVersion: v1

kind: Service

metadata:

name: mongodb

namespace: mongodb

spec:

clusterIP: None

selector:

app: mongodb

ports:

- port: 27017

targetPort: 27017

EOF

Never deploy MongoDB without resource limits! I once had a runaway query that brought down my entire node because I forgot to set them.
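The memory limit also affects how much MongoDB caches. MongoDB's documentation describes the default WiredTiger cache as the larger of 50% of (RAM minus 1 GB) and 256 MB, and inside a container the memory limit stands in for RAM. Here is a rough sketch of that arithmetic for the limits above. The helper name wt_cache_mb is made up for this example and is not part of MongoDB or kubectl.

```shell
# Estimate the default WiredTiger cache size in MB for a container
# memory limit given in MB: max(50% of (limit - 1024), 256).
# wt_cache_mb is a hypothetical helper, for illustration only.
wt_cache_mb() {
  limit_mb=$1
  half=$(( (limit_mb - 1024) / 2 ))
  if [ "$half" -lt 256 ]; then
    echo 256
  else
    echo "$half"
  fi
}

wt_cache_mb 2048   # the 2Gi limit from the StatefulSet -> 512
wt_cache_mb 1024   # a 1Gi limit falls back to the floor -> 256
```

So with a 2Gi limit, roughly 512 MB goes to the cache and the remainder is left for connections, index builds and everything else in the container. If that split doesn't suit your workload, mongod's --wiredTigerCacheSizeGB option sets the cache size explicitly.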

Step 4: Creating the Secret

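Create a secret for MongoDB credentials. The StatefulSet from Step 3 reads its credentials from this Secret, so the pod will not start until the Secret exists; you can also apply it before Step 3: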

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: mongodb-secret
  namespace: mongodb
type: Opaque
data:
  username: $(echo -n "adminuser" | base64)
  password: $(echo -n "your-secure-password" | base64)
EOF

Replace 'your-secure-password' with a strong password. I use a password manager to generate and store these credentials.
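If you don't have a password manager at hand, the sketch below shows one way to generate a random password and preview the base64 value that ends up in the manifest. It assumes a Linux shell with GNU coreutils (head, od, tr, base64); the variable names are only illustrative.

```shell
# Generate 24 random bytes and hex-encode them, giving a 48-character
# password with no characters that need escaping in shells or URIs.
MONGODB_PASSWORD="$(head -c 24 /dev/urandom | od -An -tx1 | tr -d ' \n')"

# Secret data is base64-encoded. printf avoids the trailing newline
# that a plain echo would add to the stored value.
encoded="$(printf '%s' "$MONGODB_PASSWORD" | base64)"
decoded="$(printf '%s' "$encoded" | base64 -d)"

# Sanity check: decoding must give back the original password.
[ "$decoded" = "$MONGODB_PASSWORD" ] && echo "round trip ok"
```

Keep in mind that base64 is an encoding, not encryption, so anyone who can read the Secret can read the password. As an alternative to hand-written base64, kubectl create secret generic mongodb-secret -n mongodb --from-literal=username=adminuser --from-literal=password="$MONGODB_PASSWORD" does the encoding for you.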

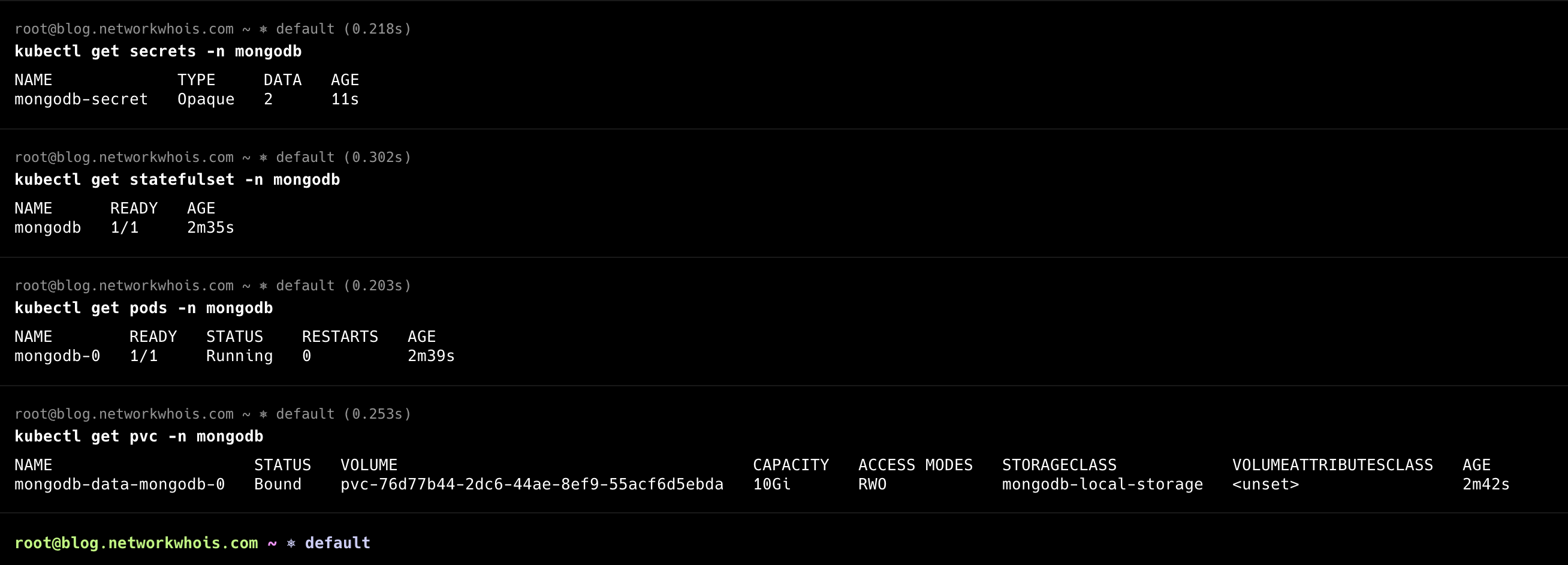

Step 5: Verifying the Deployment

Check if everything is running correctly:

# Check the StatefulSet
kubectl get statefulset -n mongodb

# Check the pod
kubectl get pods -n mongodb

# Check the PVC
kubectl get pvc -n mongodb
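The get commands only show a snapshot. In a script it can be handier to block until the pod is actually ready. A minimal sketch using two standard kubectl subcommands, rollout status and wait; the function name wait_for_mongodb and the 180s default are assumptions for this example.

```shell
# Block until the StatefulSet has rolled out and pod mongodb-0 reports
# Ready, or fail once the timeout expires.
# wait_for_mongodb is a hypothetical helper name.
wait_for_mongodb() {
  wait_timeout="${1:-180s}"
  kubectl rollout status statefulset/mongodb -n mongodb --timeout="$wait_timeout" &&
    kubectl wait --for=condition=Ready pod/mongodb-0 -n mongodb --timeout="$wait_timeout"
}

# Usage: wait_for_mongodb 300s
```

If this times out, the describe and logs commands in the troubleshooting section are the next place to look.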

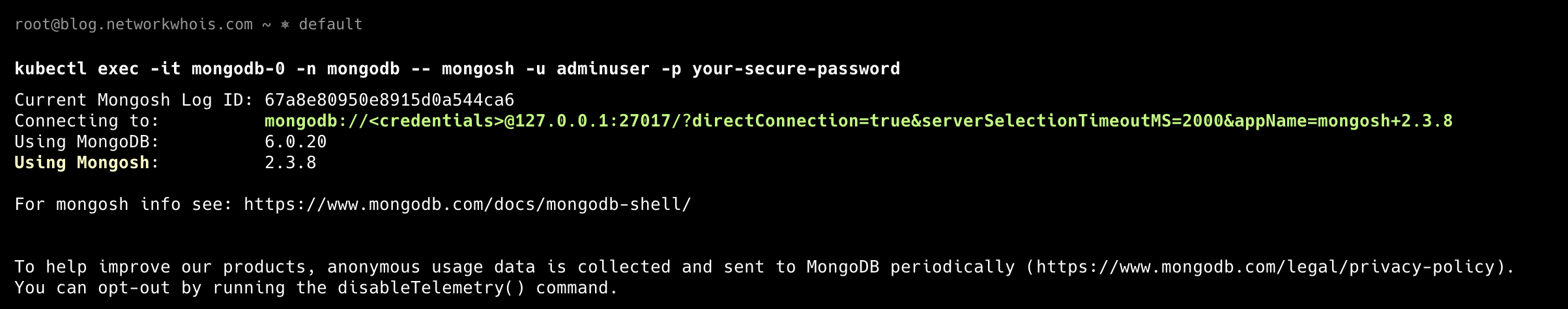

Step 6: Accessing MongoDB

To connect to MongoDB from within the cluster:

kubectl exec -it mongodb-0 -n mongodb -- mongosh -u adminuser -p your-secure-password
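Applications running in other pods usually connect with a connection string instead of kubectl exec. Because the Service is headless, the pod gets a stable DNS name of the form pod.service.namespace.svc.cluster.local. Below is a minimal sketch of assembling that URI. The mongo_uri helper is hypothetical, it assumes the default cluster.local cluster domain, and it assumes the password has no characters that need percent-encoding.

```shell
# Build a MongoDB connection URI for the pod created by the StatefulSet.
# The root user from MONGO_INITDB_ROOT_USERNAME lives in the admin
# database, hence authSource=admin.
# mongo_uri is a hypothetical helper, for illustration only.
mongo_uri() {
  user=$1
  pass=$2
  host="${3:-mongodb-0.mongodb.mongodb.svc.cluster.local}"
  printf 'mongodb://%s:%s@%s:27017/?authSource=admin\n' "$user" "$pass" "$host"
}

mongo_uri adminuser your-secure-password
```

Passing localhost as the third argument gives the URI to use through a local port-forward.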

For development purposes, I often set up a port-forward to access MongoDB locally:

kubectl port-forward -n mongodb svc/mongodb 27017:27017

Troubleshooting Common Issues

Here are some issues I've encountered and their solutions:

1. Pod Won't Start

kubectl describe pod mongodb-0 -n mongodb
kubectl logs mongodb-0 -n mongodb

2. Storage Issues

kubectl describe pvc mongodb-data-mongodb-0 -n mongodb

Backup Strategy

Here's the backup script I use (save it as mongodb-backup.sh):

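#!/bin/bash
# Dump all databases inside the pod, then copy the dump to this machine.
# Expects MONGODB_USER and MONGODB_PASSWORD to be set in the environment.
set -euo pipefail

TIMESTAMP=$(date +%Y%m%d_%H%M%S)
BACKUP_DIR="/backup"

kubectl exec -n mongodb mongodb-0 -- mongodump \
  --username "$MONGODB_USER" \
  --password "$MONGODB_PASSWORD" \
  --authenticationDatabase admin \
  --out /dump

kubectl cp mongodb/mongodb-0:/dump "$BACKUP_DIR/mongodb_backup_$TIMESTAMP"

Production Considerations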

Before going to production, consider these points I've learned the hard way:

- Set up proper monitoring using Prometheus and Grafana

- Implement regular backup procedures

- Configure network policies to restrict access

- Use anti-affinity rules if running multiple replicas

- Regularly test your backup and restore procedures
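To make the network policy point concrete, here is a sketch of a policy that only admits traffic to the MongoDB pods on port 27017, either from pods in the mongodb namespace itself or from namespaces carrying a label. The policy name, the file name and the access-mongodb label are assumptions made for this example. It also assumes your cluster enforces NetworkPolicy, which K3s does by default unless the built-in controller was disabled at install time.

```shell
# Write the policy to a file so it can be reviewed before applying.
# The quoted 'EOF' keeps the shell from expanding anything in the YAML.
cat > mongodb-networkpolicy.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: mongodb-allow-clients        # hypothetical name
  namespace: mongodb
spec:
  podSelector:
    matchLabels:
      app: mongodb
  policyTypes:
    - Ingress
  ingress:
    - from:
        # Namespaces explicitly labelled as MongoDB clients (assumed label)
        - namespaceSelector:
            matchLabels:
              access-mongodb: "true"
        # Any pod in the mongodb namespace, e.g. other replica set members
        - podSelector: {}
      ports:
        - protocol: TCP
          port: 27017
EOF

# Review the file, then apply it with:
#   kubectl apply -f mongodb-networkpolicy.yaml
```

Client namespaces then opt in with kubectl label namespace followed by the namespace name and access-mongodb=true.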

Scaling MongoDB

When your application grows, you might want to set up a replica set. Here's a quick overview of how to modify the deployment for replication:

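spec:
  replicas: 3
  template:
    spec:
      containers:
        - name: mongodb
          command:
            - mongod
            - "--replSet"
            - "rs0"
            - "--bind_ip"
            - "0.0.0.0"

I'll cover MongoDB replication in detail in a future article. It's a topic that deserves its own deep dive! One caveat in the meantime: setting command bypasses the image's entrypoint script, which is what processes the MONGO_INITDB_ROOT_* variables and turns on authentication, so account for that before relying on this fragment.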
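For orientation only, the replica set still has to be initiated once all three pods are running. The sketch below prints an rs.initiate() call with one member per StatefulSet pod, using the stable DNS names from the headless Service. The helper name rs_initiate_js is hypothetical, and the host names assume the mongodb Service and namespace from this guide and the default cluster.local domain.

```shell
# Print an rs.initiate() call listing one member per StatefulSet pod.
# rs_initiate_js is a hypothetical helper, for illustration only.
rs_initiate_js() {
  replicas=$1
  members=""
  sep=""
  i=0
  while [ "$i" -lt "$replicas" ]; do
    host="mongodb-$i.mongodb.mongodb.svc.cluster.local:27017"
    members="$members${sep}{_id: $i, host: \"$host\"}"
    sep=", "
    i=$(( i + 1 ))
  done
  printf 'rs.initiate({_id: "rs0", members: [%s]})\n' "$members"
}

rs_initiate_js 3

# The output could then be run once against the first pod, for example:
#   kubectl exec -n mongodb mongodb-0 -- mongosh --quiet --eval "$(rs_initiate_js 3)"
# Add -u and -p to mongosh if authentication is enabled.
```

This covers only the initiation step; keyfile authentication between members and the rest of the replication setup are out of scope here.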

While deploying MongoDB on K3s might seem daunting at first, it becomes quite manageable once you understand the key components. I've been running this setup in production for over a year now, and it's been rock solid. Remember to always back up your data and monitor your deployment closely.

Have you deployed MongoDB on K3s? I'd love to hear about your experience in the comments below. What challenges did you face, and how did you overcome them?